Not Safe For Work (NSFW) AI must also accommodate different age groups. As more age groups consume digital content across a widening range of topics, AI systems need to identify and filter inappropriate content accurately, in a way that matches the maturity and sensitivity of each age group. Drawing on recent data about technology advances and user interaction, this article outlines effective strategies for adapting AI to protect users of all ages.

Age-Appropriate Filtering Methods

These AI systems can be adapted to the communities they serve so that they apply age-specific filtering techniques. For younger users the AI is made extra strict, filtering out a large share of NSFW content that could be inappropriate for viewers of an impressionable age. According to a study cited by one top tech company, its AI systems, which rely on image and text recognition algorithms and are held to strict safety standards, are trained to block 99% of explicit content for users under 18.
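One way to picture age-specific filtering is a score threshold that tightens for younger users. The sketch below is only an illustration: it assumes an upstream classifier that returns an NSFW score between 0 and 1, and the tier boundaries, threshold values, and function names are hypothetical rather than taken from any real system.

```python
# Hypothetical tiers: (exclusive upper age bound, maximum allowed NSFW score).
# A lower threshold means a stricter filter. The numbers are illustrative only.
AGE_TIER_THRESHOLDS = [
    (13, 0.10),  # under 13
    (18, 0.20),  # 13 to 17
]
ADULT_THRESHOLD = 0.80  # 18 and over


def threshold_for_age(age: int) -> float:
    """Return the highest NSFW score a user of this age is allowed to see."""
    if age < 0:
        raise ValueError("age must be non-negative")
    for upper_bound, threshold in AGE_TIER_THRESHOLDS:
        if age < upper_bound:
            return threshold
    return ADULT_THRESHOLD


def is_blocked(nsfw_score: float, age: int) -> bool:
    """Block content whose score exceeds the threshold for the user's age tier."""
    if not 0.0 <= nsfw_score <= 1.0:
        raise ValueError("nsfw_score must be between 0 and 1")
    return nsfw_score > threshold_for_age(age)
```

With these assumed values, a piece of content scored 0.5 would be blocked for a 15-year-old but shown to a 30-year-old.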

Adaptive Content Moderation

However, children grow up, and their need to view and understand content changes with them, which calls for a more sophisticated approach to NSFW filtering. Through machine learning, AI systems can evolve with the preferences and maturity of users as they move from adolescence to adulthood. Sources say that AI moderation tools that adapt and learn from user feedback have led to 30 percent fewer complaints of over-censorship, and that users appreciate a moderation system that tunes itself to their age level.
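As a rough sketch of feedback-driven moderation, the example below nudges a filtering threshold up or down in response to user reports while keeping it inside bounds set for the user's age tier. The class name, the feedback labels, the step size, and the bounds are all assumptions made for illustration, not a description of any deployed system.

```python
class AdaptiveThreshold:
    """Filtering threshold that shifts with user feedback within fixed bounds."""

    def __init__(self, initial: float, floor: float, ceiling: float,
                 step: float = 0.02):
        if not floor <= initial <= ceiling:
            raise ValueError("initial must lie between floor and ceiling")
        self.floor = floor
        self.ceiling = ceiling
        self.step = step
        self.value = initial

    def _clamp(self) -> None:
        # Never let feedback push the threshold outside the age tier's bounds.
        self.value = max(self.floor, min(self.ceiling, self.value))

    def record_feedback(self, kind: str) -> float:
        """Relax on over-censorship reports, tighten on missed content."""
        if kind == "over_blocked":
            self.value += self.step
        elif kind == "under_blocked":
            self.value -= self.step
        else:
            raise ValueError(f"unknown feedback kind: {kind!r}")
        self._clamp()
        return self.value

    def move_to_tier(self, floor: float, ceiling: float) -> float:
        """Apply new bounds when the user ages into a different tier."""
        self.floor, self.ceiling = floor, ceiling
        self._clamp()
        return self.value
```

Clamping is the important design choice here: feedback can fine-tune the filter, but it cannot loosen it beyond what the age tier allows.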

User-Controlled Settings

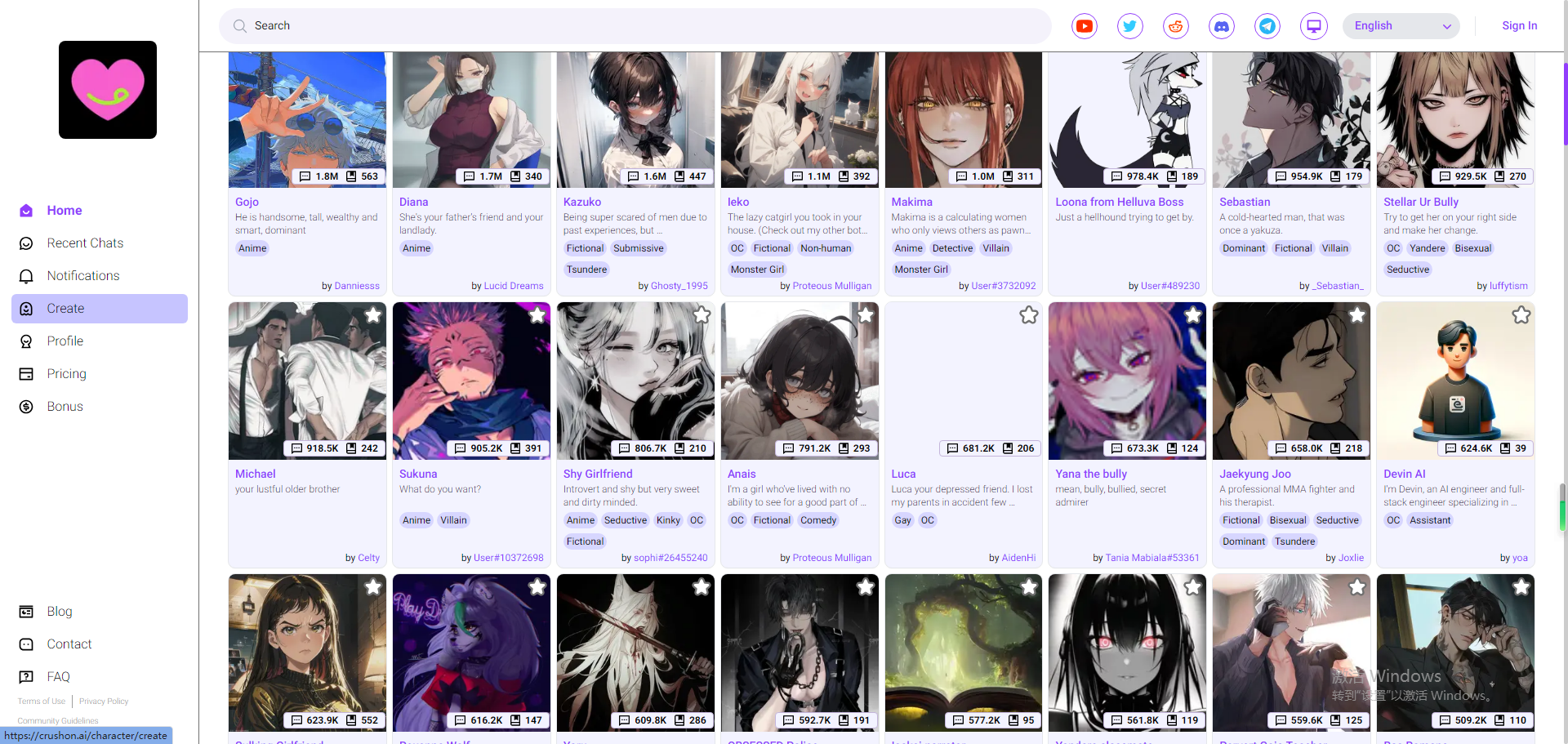

Providing users with AI-driven, customizable options further strengthens NSFW safety across age groups. Platforms can build in settings that let users take control and set their own limits according to their comfort level, within the bounds appropriate to their age. Findings show that compliance with recommended safety settings, especially among teens, increases by up to 40% when users can decide which content filters to use.
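A minimal sketch of user-controlled settings might look like the following, where each user picks a permissiveness level per content category and the platform caps that choice by age. The category names, the 0 to 3 level scale, and the age caps are hypothetical values chosen for illustration.

```python
from typing import Dict, Mapping

# Hypothetical content categories a user can configure.
CATEGORIES = ("violence", "profanity", "sexual_content")


def max_level_for_age(age: int) -> int:
    """Highest permissiveness level (0 = block all, 3 = allow all) for an age."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 13:
        return 0
    if age < 18:
        return 1
    return 3


def apply_user_settings(age: int, requested: Mapping[str, int]) -> Dict[str, int]:
    """Return the effective level per category after applying the age cap."""
    cap = max_level_for_age(age)
    effective = {}
    for category in CATEGORIES:
        # Categories the user has not configured default to the strictest level.
        level = requested.get(category, 0)
        if level < 0:
            raise ValueError(f"level for {category} must be non-negative")
        effective[category] = min(level, cap)
    return effective
```

Under these assumptions, a 15-year-old who requests level 3 for violence still receives level 1, while an adult's choice is honored as requested.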

AI-Powered Education Tools

Artificial intelligence can also play an educational role, especially for young people exploring the web. Age-appropriate bots and tools can use AI to advise and warn users about potentially NSFW content and to teach safety practices. Users who engaged with these AI-driven programs were significantly more likely to develop good browsing habits, with avoidance of some of the most damaging online behaviors improving by up to 50% among users aged 12 to 16.

Learning Continuously and Incorporating Feedback

For all age groups, AI-based NSFW safety is already highly effective, but it improves considerably when the system continuously learns from user interactions and feedback. The AI learns from new data and then adapts to the latest social and legal demands, so these systems are updated as part of an ongoing process. As a result, AI moderation keeps pace with cultural and technological developments, which makes it more relevant and therefore more effective.
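To make the idea of continuous learning concrete, here is a small sketch of a classifier that is updated one labeled example at a time as feedback arrives. It uses plain online logistic regression as a stand-in technique. The feature vectors, the learning rate, and the class name are assumptions for illustration, and production moderation models are far more complex.

```python
import math
from typing import Sequence


class OnlineClassifier:
    """Tiny logistic-regression model trained incrementally from feedback."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features: Sequence[float]) -> float:
        """Return the estimated probability that the content is NSFW."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        z = max(-30.0, min(30.0, z))  # keep exp() in a numerically safe range
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features: Sequence[float], label: int) -> None:
        """Take one gradient step on a single example (label 1 = NSFW, 0 = safe)."""
        error = self.predict(features) - label
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error
```

Each confirmed user report can be fed to `update` as a new labeled example, so the model shifts gradually without a full retraining run.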

In this way, AI presents content appropriate to each age group, increasing NSFW safety across digital platforms. The result is platforms that better reflect the online experience each age demographic wants and needs, making the web safer and better for all users. For more details on ongoing progress in AI for NSFW safety, see nsfw character ai.